```
ERROR hdfs.KeyProviderCache: Could not find uri with key [dfs.encryption.key.provider.uri] to create a keyProvider !!
java.lang.IllegalArgumentException: Wrong FS: hdfs://master:9000/tmp/tags/part-00000-b53ea587-a49e-4bfd-b952-0653aef45ada.snappy.parquet, expected: file:///
at org.apache.hadoop.fs.FileSystem.checkPath(FileSystem.java:649)
at org.apache.hadoop.fs.RawLocalFileSystem.pathToFile(RawLocalFileSystem.java:82)
at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:606)
at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:824)
at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:601)
at org.apache.hadoop.fs.FileSystem.isDirectory(FileSystem.java:1439)
at org.apache.hadoop.fs.ChecksumFileSystem.rename(ChecksumFileSystem.java:606)
at org.apache.hadoop.hive.ql.metadata.Hive.moveFile(Hive.java:2632)
at org.apache.hadoop.hive.ql.metadata.Hive.replaceFiles(Hive.java:2892)
at org.apache.hadoop.hive.ql.metadata.Hive.loadTable(Hive.java:1640)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.spark.sql.hive.client.Shim_v0_14.loadTable(HiveShim.scala:716)
at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$loadTable$1.apply$mcV$sp(HiveClientImpl.scala:672)
at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$loadTable$1.apply(HiveClientImpl.scala:672)
at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$loadTable$1.apply(HiveClientImpl.scala:672)
at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$withHiveState$1.apply(HiveClientImpl.scala:283)
at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:230)
at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:229)
at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:272)
at org.apache.spark.sql.hive.client.HiveClientImpl.loadTable(HiveClientImpl.scala:671)
at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$loadTable$1.apply$mcV$sp(HiveExternalCatalog.scala:741)
at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$loadTable$1.apply(HiveExternalCatalog.scala:739)
at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$loadTable$1.apply(HiveExternalCatalog.scala:739)
at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:95)
at org.apache.spark.sql.hive.HiveExternalCatalog.loadTable(HiveExternalCatalog.scala:739)
at org.apache.spark.sql.catalyst.catalog.SessionCatalog.loadTable(SessionCatalog.scala:319)
at org.apache.spark.sql.execution.command.LoadDataCommand.run(tables.scala:302)
at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:58)
at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:56)
at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:74)
at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)
at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)
at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:135)
at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:132)
at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:113)
at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:87)
at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:87)
at org.apache.spark.sql.Dataset.<init>(Dataset.scala:185)
at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:64)
at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:592)
at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:699)
... 64 elided
```

Running `hc.sql("load data inpath '/tmp/tags' overwrite into table tags")` in spark-shell fails with the error above. How can I fix it?
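The frames at the top of the trace show `RawLocalFileSystem`, Hadoop's local (`file:///`) filesystem, being handed an `hdfs://` path inside `Hive.moveFile`, which is called from `Hive.loadTable`. One plausible reading, not confirmed in this thread, is that the target table's location resolved to the local filesystem, so the move is attempted there with an HDFS source path. The sketch below is a simplified model of the scheme/authority check behind the "Wrong FS" message. It was written for illustration and is not Hadoop's actual `FileSystem.checkPath` code; the object name `WrongFsCheck` is hypothetical.

```scala
import java.net.URI

// Hypothetical, simplified model of the "Wrong FS" check (not Hadoop's source):
// a path is accepted if it has no scheme (it is then resolved against this
// filesystem), or if its scheme matches the filesystem's scheme and, when both
// carry one, its authority (host:port) matches too.
object WrongFsCheck {
  def accepts(fsUri: URI, path: URI): Boolean = {
    val scheme = path.getScheme
    if (scheme == null) true
    else if (!scheme.equalsIgnoreCase(fsUri.getScheme)) false
    else {
      val pathAuth = path.getAuthority
      val fsAuth = fsUri.getAuthority
      pathAuth == null || fsAuth == null || pathAuth.equalsIgnoreCase(fsAuth)
    }
  }

  def main(args: Array[String]): Unit = {
    val localFs = new URI("file:///")
    val hdfsFs = new URI("hdfs://master:9000")
    // Source file path copied from the error message above
    val src = new URI("hdfs://master:9000/tmp/tags/part-00000-b53ea587-a49e-4bfd-b952-0653aef45ada.snappy.parquet")
    println(accepts(localFs, src)) // false: an hdfs:// path handed to the local FS
    println(accepts(hdfsFs, src))  // true
  }
}
```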

Replies: 12

-

Experts, please help me!

-

Waiting online for an answer.

-

Please help me; I have been scratching my head since last week and still have had no luck.

-

The environment is Spark 2.1.0, but I am using the 1.x syntax.

-

Are you reading a local file?

-

@青牛 No, the data is on HDFS. Here is the code:

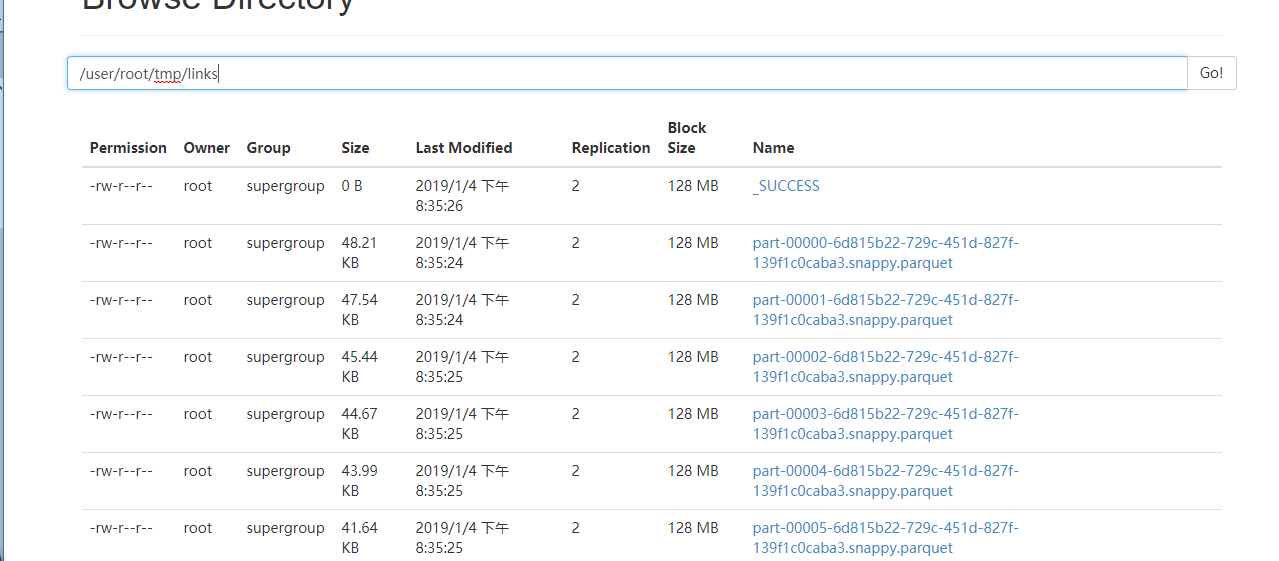

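```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.{SQLContext, SaveMode}
import org.apache.spark.sql.hive.HiveContext

// Enclosing object added so the snippet compiles; the name is assumed.
object ETL {
  // Assumed definition: the post does not show Links; the fields follow the
  // columns of the Hive table created below.
  case class Links(movieId: Int, imdbId: Int, tmdbId: Int)

  def main(args: Array[String]): Unit = {
    val localclusterURL = "local[2]"
    val clusterMasterURL = "spark://master:7077"
    val conf = new SparkConf().setAppName("ETL").setMaster(clusterMasterURL)
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)
    val hc = new HiveContext(sc)
    import sqlContext.implicits._

    // Number of RDD partitions: an integer multiple of the CPU cores the
    // cluster allocates to the application is usually a good choice
    val minPartitions = 8

    // links
    val links = sc.textFile("data/links.txt", minPartitions)
      .filter { !_.endsWith(",") }
      .map(_.split(","))
      .map(x => Links(x(0).trim.toInt, x(1).trim.toInt, x(2).trim.toInt))
      .toDF()
    links.write.mode(SaveMode.Overwrite).parquet("/tmp/links")
    hc.sql("drop table if exists links")
    hc.sql("create table if not exists links(movieId int, ImdbId int, tmdbId int) stored as parquet")
    hc.sql("load data inpath '/tmp/links' overwrite into table links")
  }
}
```

-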

@青牛

Is my Hive cluster not set up properly, or is it something else?

I configured Hive by following this blog post:

https://blog.csdn.net/lovebyz/article/details/83346458?from=singlemessage&isappinstalled=0

-

@sourtanghc Try adding the hdfs scheme to the path, e.g. hdfs://nm/xxx

-

@青牛 I added it; now I get this error:

java.lang.IllegalArgumentException: Wrong FS: hdfs://master:9000/user/root/tmp/links/part-00000-6d815b22-729c-451d-827f-139f1c0caba3.snappy.parquet, expected: file:///

-

@sourtanghc Could the hdfs scheme or address be wrong? Check what is configured in hdfs-site.xml.
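In stock Hadoop, `fs.defaultFS` is normally set in `core-site.xml` (its built-in default is `file:///`), while Hive's warehouse location comes from `hive.metastore.warehouse.dir` in `hive-site.xml`. As a minimal sketch for checking what a site file actually contains, the hypothetical helper `SiteXml.property` below reads one property from a Hadoop-style XML file using only the JDK's DOM parser. The sample value `hdfs://master:9000` is taken from the error message and may not match the real configuration.

```scala
import java.io.ByteArrayInputStream
import java.nio.charset.StandardCharsets
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

// Hypothetical helper (not a Hadoop API): returns the trimmed <value> of the
// first <property> whose <name> equals `name`, or None if it is absent.
object SiteXml {
  def property(xml: String, name: String): Option[String] = {
    val doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
      .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
    val props = doc.getElementsByTagName("property")
    (0 until props.getLength).iterator
      .map(i => props.item(i).asInstanceOf[Element])
      .find(p => text(p, "name").contains(name))
      .flatMap(p => text(p, "value"))
  }

  // Trimmed text of the first descendant element with the given tag, if any.
  private def text(parent: Element, tag: String): Option[String] = {
    val nodes = parent.getElementsByTagName(tag)
    if (nodes.getLength == 0) None else Some(nodes.item(0).getTextContent.trim)
  }

  def main(args: Array[String]): Unit = {
    // Illustrative core-site.xml content (assumed, not taken from the cluster)
    val coreSite =
      """<configuration>
        |  <property>
        |    <name>fs.defaultFS</name>
        |    <value>hdfs://master:9000</value>
        |  </property>
        |</configuration>""".stripMargin
    println(property(coreSite, "fs.defaultFS"))                 // Some(hdfs://master:9000)
    println(property(coreSite, "hive.metastore.warehouse.dir")) // None
  }
}
```

In practice the string would be read from the actual file under the Hadoop or Spark `conf` directory.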

-

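`parquet("/tmp/links")` has no scheme, so it is resolved against the default filesystem, which is the local one unless `fs.defaultFS` points to HDFS. To store the data on HDFS explicitly, write `parquet("hdfs://XXX:9000/XXX")`.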
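A path string without a scheme, such as `/tmp/links`, is not inherently local: Hadoop qualifies it against `fs.defaultFS`, and a relative path is resolved against the working directory, which on HDFS defaults to `/user/<user name>`. That is consistent with the `/user/root/tmp/links` path in the second error, assuming the path was written as the relative `tmp/links`. The following is a simplified sketch under those assumptions, built on `java.net.URI`; it is not Hadoop's `Path.makeQualified`, and `QualifyPath.qualify` is a hypothetical name.

```scala
import java.net.URI

// Hypothetical, simplified model of path qualification (not Hadoop's code):
// - a path with a scheme (hdfs://, file:, ...) is used as-is;
// - an absolute path is resolved against fs.defaultFS;
// - a relative path is first prefixed with the working directory.
object QualifyPath {
  def qualify(defaultFs: String, workingDir: String, path: String): String = {
    if (new URI(path).getScheme != null) path
    else {
      val absolute = if (path.startsWith("/")) path else s"$workingDir/$path"
      new URI(defaultFs).resolve(absolute).toString
    }
  }

  def main(args: Array[String]): Unit = {
    val fs = "hdfs://master:9000"
    println(qualify(fs, "/user/root", "/tmp/links")) // hdfs://master:9000/tmp/links
    println(qualify(fs, "/user/root", "tmp/links"))  // hdfs://master:9000/user/root/tmp/links
    // With a local default FS the same string ends up as a file: URI
    println(qualify("file:///", "/root", "/tmp/links"))
  }
}
```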

-

@青牛 My hive-site.xml looks like this. How should I configure it? I have tried many blog posts. My environment is ZooKeeper + Hive 1.2.1 + Spark 2.1.0 + Hadoop 2.7.3.