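import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.PartitionInfo;
import org.apache.kafka.common.TopicPartition;

public class kafkaConsumer extends Thread {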

private String topic;

public kafkaConsumer() {

super();

}

@Override

public void run() {

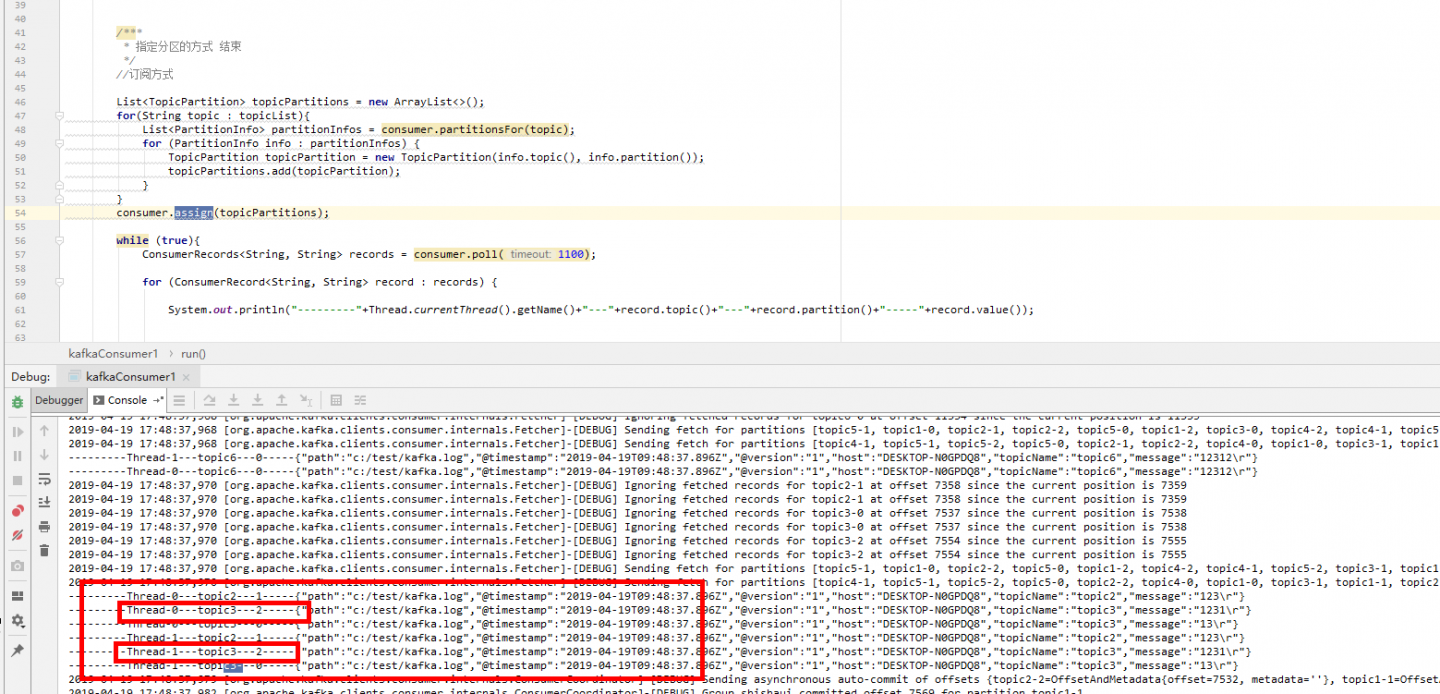

List<String> topicList = Arrays.asList("topic1","topic2","topic3","topic4","topic5","topic6");

KafkaConsumer<String, String> consumer = createConsumer("aa");

List<TopicPartition> topicPartitions = new ArrayList<>();

for(String topic : topicList){

List<PartitionInfo> partitionInfos = consumer.partitionsFor(topic);

for (PartitionInfo info : partitionInfos) {

TopicPartition topicPartition = new TopicPartition(info.topic(), info.partition());

topicPartitions.add(topicPartition);

}

}

// Manually assign every partition of every topic to this consumer (no group rebalancing).
consumer.assign(topicPartitions);

while (true){

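// poll(long) is deprecated; the Duration overload is the supported form.
ConsumerRecords<String, String> records = consumer.poll(java.time.Duration.ofMillis(1100));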

for (ConsumerRecord<String, String> record : records) {

System.out.println("---------"+Thread.currentThread().getName()+"---"+record.topic()+"---"+record.partition()+"-----"+record.value());

}

try {

Thread.sleep(2000);

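} catch (InterruptedException e) {

// Restore the interrupt flag and stop polling instead of silently swallowing the exception.
Thread.currentThread().interrupt();

break;

}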

}

}

private KafkaConsumer<String, String> createConsumer(String groupName) {

Properties props = new Properties();

props.put("bootstrap.servers", "127.0.0.1:9092");

props.put("group.id", groupName);

props.put("enable.auto.commit", "true");

props.put("max.poll.records", "100"); // previously 500, reduced to 100

props.put("auto.commit.interval.ms", "1000");

props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);

return consumer;

}

public static void main(String[] args) {

new kafkaConsumer().start(); // uses the topic "test" already created in the Kafka cluster

new kafkaConsumer().start(); // uses the topic "test" already created in the Kafka cluster

}

}